A lot of organizations and decision makers these days are facing challenging choices in their strategies, having to balance all the variables and unknowns linked to the current developments in AI (LLMs, GenAI, etc). It’s very easy to be overwhelmed, or get swayed by news and the pace of innovation.

This is very understandable: not having invested enough, not having the right skills and competences — and thus being beaten by competitors and new startups — is driving a lot of fear and it is leading to a lot of reactive strategies. This is nothing new in the space of tech and more broadly innovation, but it’s at the forefront these days in the field of AI given how quickly it happened and how fast money is being invested.

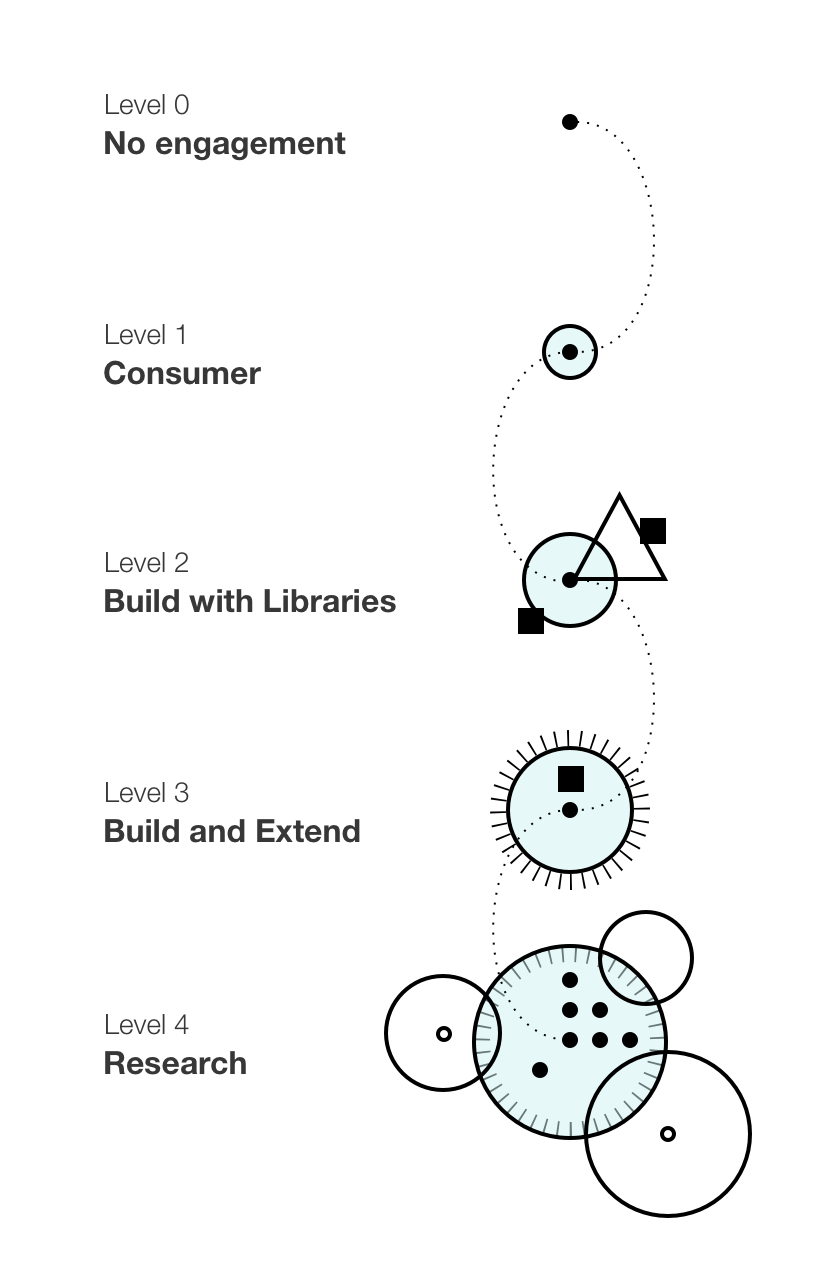

As different companies have different needs, it’s important to define different levels of engagement. The one I’m outlining here is a quite pragmatic one that I found effective in helping move forward strategic discussions. While not groundbreaking as it applies to many kinds of innovation, it’s still a solid foundation to start with. From here then different organizations can work to define their own goals, path, and level of granularity.

Levels:

- Level 0 — No engagement

- Level 1 — Consumer

- Level 2 — Build with Libraries

- Level 3 — Build and Extend

- Level 4 — Research

Level 0 — No engagement

The organization has decided to not use anything AI related. This includes both internal tooling for employees, as well as building anything in the product or services that the company delivers.

When is this a good choice?

It’s when after a thorough assessment and analysis the company has decided that the current AI tools have no benefit neither in the short nor long term, and similarly that no current AI tools exist in the market that can boost employee productivity.

It’s also possible that some companies might decide to wait for the market winning strategies to emerge and invest later to be more careful and efficient in investing.

What to pay attention to

- While this can be seen by some people as an organization being in denial, there can be reasons for this choice and I’d always inquire about these before making a judgement call. The important thing is that the decision was based on solid ground, and not by being reactive or skeptic at a personal level.

- Have periodic checks that this decision is still the right one.

Level 1 — Consumer

The organization has approved the use of new AI tools, like coding assistants, general LLMs, etc, for general use to employees. There’s however no active plan to include anything AI in the product or service of the organization.

When is this a good choice?

This is likely a good option for a lot of organizations, especially ones that don’t build software, as various tools are being rolled out and integrated in many productivity suites already being used.

What to pay attention to

- Keep focus on productivity, not adopting everything for the sake of adoption (but experimentation is good).

- Don’t just open access, but support the adoption with onboarding and proper learning documentation that is specific for the organization needs and ideally each individual role.

- Assessing risks is important, but try to strike a balance between the requirements of security and privacy, and the ability of employees to explore and adopt effective tools.

Skills needed

As this is at a more consumer level, it’s likely that all the tools introduced have their own learning and onboarding. As such, there aren’t major skills required. However, it’s important to have people inside the company that have a deeper practical understanding and can support the internal onboarding strategy.

Examples

- GitHub Copilot

- General use LLMs (i.e. Claude, Gemini, ChatGPT, …)

- Built-in augmentation of existing tools (i.e. Miro AI, …)

Level 2 — Build with Libraries

The organization has decided to incorporate some degree of AI tools into their products, specifically using existing products and libraries. In this scenario the product teams have identified good customer-centric use cases and have made a cost/benefit assessment and decided that existing libraries fulfill the core needs.

In this case we can either mean that external APIs are being used (i.e. a contract with a third party) or a library is deployed in-house and used largely as-is.

When is this a good choice?

There can be many reasons, but two are more common. In one case, the existing libraries are an ideal or almost ideal match for the customer use-case. In another case, the cost of developing a more custom solution, while potentially better, has a cost that can’t be justified.

What to pay attention to

- Take some time to build a prototype and test out the libraries for the actual design that is going to be delivered.

- Don’t get too deeply paired up with a specific library or API: build with the flexibility to switch to a different one later.

- Make sure that the extra features are properly isolated when built, and possibly discuss if it’s valuable to have a user-facing switch to turn it off (can be relevant for some users of companies to have it off for compliance reasons).

- Especially with LLM, be careful about content moderation for the output.

- Note that deploying LLM in-house compared to using a third-party API call has a whole different set of considerations cost-wise. That’s why there are solutions that host and run LLMs for you if you don’t want to use a specific one (i.e. Claude API, ChatGPT API, etc).

- The ethics of the company providing the API, and possible legal implications.

Skills needed

Everyone in the product side needs to know or learn how to build with the specific AI tool that has been identified. Product people need to get to know how it works in detail, designers to explore the specific design patterns and edge cases, and engineers explore libraries and integrations. Some general ML knowledge is ideal, but the libraries often provide enough guidance to implement them as the plan is to not have any heavy customization.

Examples

- Claude and other provider’s APIs

- Third-party LLM providers (i.e. Lambda AI, Groq AI, …)

- RAG libraries to optimize the LLM output (i.e. LlamaIndex)

- Open standards for interoperability (i.e. ONNX, …)

Level 3 — Build and Extend

The organization is including more R&D and composing existing approaches, for example using a foundational model and training it for a specific purpose. Libraries and APIs are likely still used, but there’s a deeper understanding of ML, AI in general, and the necessary tooling.

When is this a good choice?

This is ideal when there’s a competitive advantage in developing more custom solutions or that’s needed for the specific user need, and the cost/benefit analysis shows this to be the right option.

What to pay attention to

- Extending requires much deeper AI and ML expertise, so while specialists are good to have at previous levels, at this stage they are definitely a requirement.

- Assessing the cost/benefit can be difficult if there isn’t pre-existing expertise in the topic, both at strategic and implementation level.

- On-premise deployments are expensive and should be evaluated carefully against the target scale and performance of the platform, with attention to quantized models.

- The ethics of the data that created the foundation model used, and of any new training data used, and possible legal implications.

Skills needed

At this level it’s needed a deeper expertise in AI toolsets and theory, especially on the development side. Ideally not just developers but also adding data scientists with a specialization in this area.

Examples

- Hosted LLM providers (i.e. Lambda AI, RunPod…)

- ML libraries (i.e. TensorFlow, PyTorch, …)

- Orchestration of LLM (i.e. dstack, …)

Level 4 — Research

Not many organizations will be able to have the funding and skills to operate at this level. This is where the company is actively competing to develop new models and solutions, either in specialist fields (i.e. healthcare) or generic ones (i.e. generic agents). They are investing in the innovation of the AI field itself, and likely competing to get top AI talent.

These companies are the usual big names: Anthropic, OpenAI, DeepSeek, Google, Meta, etc.

When is this a good choice?

Unless the company is competing directly in pushing the boundaries and innovation in the AI field, it’s unlikely to be a good idea. As this is pure R&D work, with the current hardware and experts costs, it’s a very expensive bet and needs to be backed by a strategy that can afford to invest in it.

What to pay attention to

- The ethics of the data used, and possible legal implications.

- Expenses to build the infrastructure.

- Supply-chain planning to be able to deliver in time.

- Competitor innovation and announcements.

- Papers published by institutions and academies.

Skills needed

Best in class researchers, data scientists, engineers, with deep expertise in AI. Likely also PhD in the field or similar.

Identifying the right level of engagement, and where necessary a plan to move from one level to another is very important to cut through the noise of the hype cycles. The notes above try to summarize a very complex and evolving space: as such, it’s important to acknowledge there are limitations in such an abstraction, both in the summarization as well as in the level of knowledge needed.

At the same time, it provides a pragmatic scaffolding to ground medium to long term strategic work in the field. You can build on it.

Thanks to Erlend Davidson for reviewing the content.